With AI chatbots now prompting us in virtually every app and online experience, it’s becoming more common for people to converse with these tools, and even to develop a sense of friendship with their favorite AI companions over time.

But that’s a risky proposition. What happens when people start to rely on AI chatbots for companionship, even relationships, and then those chatbot tools are deactivated, or people lose access to them in other ways?

What are the societal effects of these virtual interactions, and how will they shape broader community life?

These are key questions, and they seem, in large part, to be getting overlooked in the name of progress.

But recently, the Stanford Deliberative Democracy Lab, in conjunction with Meta, conducted a series of surveys to get a sense of how people feel about the impact of AI interaction, and what limits (if any) should be placed on AI engagement.

As per Stanford:

“For example, how human should AI chatbots be? What are users’ preferences when interacting with AI chatbots? And, which human traits should be off-limits for AI chatbots? Furthermore, for some users, part of the appeal of AI chatbots lies in its unpredictability or sometimes risky responses. But how much is too much? Should AI chatbots prioritize originality or predictability to avoid offense?”

To gauge the general response to these questions, which could also help guide Meta’s AI development plans, the Democracy Lab recently surveyed 1,545 participants from four countries (Brazil, Germany, Spain, and the United States) for their views on some of these concerns.

You can check out the full report here, but in this post, we’ll take a look at some of the key findings.

First off, the study shows that, in general, most people see potential efficiency benefits in AI use, but fewer see benefits in AI companionship.

This offers an interesting snapshot of general sentiment toward AI across a range of key areas.

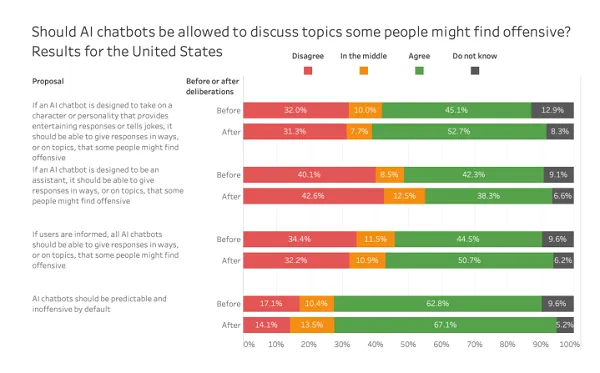

The study then asked more specific questions about AI companions, and what limits should be placed on their use.

In this query, most participants indicated that they’re okay with chatbots addressing potentially offensive topics, though around 40% either opposed it or were neutral.

That’s interesting, considering the broader debate about free speech in modern media. Given the focus on that debate, you might expect more people to see this as a major concern, but the split here indicates that there’s no true consensus on what chatbots should be able to address.

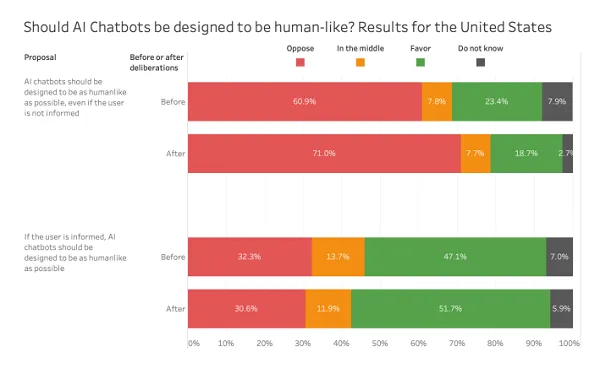

Participants were also asked whether they think that AI chatbots should be designed to replicate humans.

The responses point to a significant level of concern about AI chatbots playing a human-like role, especially if users are not informed that they’re engaging with an AI bot.

This is notable in the context of Meta’s plan to unleash an army of AI bot profiles across its apps, and have them engage on Facebook and IG as if they were real people. Meta hasn’t yet provided specific details on how this would work, or on what kind of disclosures it plans to display for AI bot profiles. But the responses here suggest that people want to be clearly informed when they’re dealing with a bot, rather than having these profiles passed off as real people.

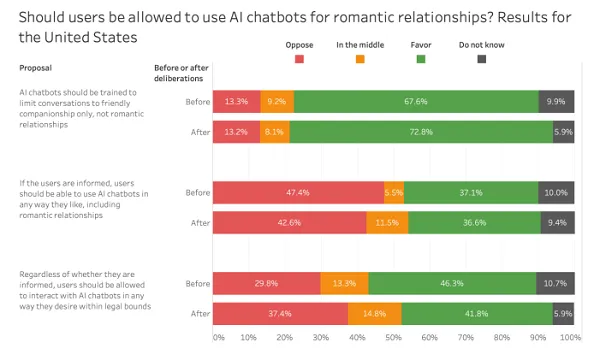

Also, people don’t seem particularly comfortable with the idea of AI chatbots as romantic companions:

The results here show that the vast majority of people favor restrictions on AI interactions, so that users (ideally) don’t develop romantic relationships with bots, while opinion is fairly evenly split on the idea that people should be able to interact with AI chatbots however they want “within legal bounds.”

As noted, this is a particularly risky area, and one where we simply don’t yet have enough research to judge the mental health benefits or harms. It seems like this could be a pathway to harm, but it’s also possible that romantic involvement with an AI could be beneficial in many cases, by helping to address loneliness and isolation.

But it’s something that needs proper study, and it shouldn’t be allowed or promoted by default.

There’s a heap more insight in the full report, which you can access here, and which raises some key questions about the development of AI and our increasing reliance on AI bots.

That reliance is only going to grow, and as such, we need more research and community input on these issues to make more informed choices about AI development.