As highlighted by Meta CEO Mark Zuckerberg in a recent overview of the impact of AI, Meta is relying on AI-powered systems for a growing share of its internal development and management, including coding, ad targeting, and risk assessment.

And that could soon become an even bigger factor, with Meta reportedly planning to use AI for up to 90% of all of its risk assessments across Facebook and Instagram, including all product development and rule changes.

As reported by NPR:

“For years, when Meta launched new features for Instagram, WhatsApp and Facebook, teams of reviewers evaluated possible risks: Could it violate users’ privacy? Could it cause harm to minors? Could it worsen the spread of misleading or toxic content? Until recently, what are known inside Meta as privacy and integrity reviews were conducted almost entirely by human evaluators, but now, according to internal company documents obtained by NPR, up to 90% of all risk assessments will soon be automated.”

Which seems potentially problematic, putting a lot of trust in machines to protect users from some of the worst aspects of online interaction.

But Meta is confident that its AI systems can handle such tasks, including moderation, as it showcased in its Q1 Transparency Report, published last week.

Earlier in the year, Meta announced that it would be changing its approach to “less severe” policy violations, with a view to reducing the number of enforcement mistakes and restrictions.

In changing that approach, Meta says that when it finds that its automated systems are making too many mistakes, it’s now deactivating those systems entirely as it works to improve them, while it’s also:

“…getting rid of most [content] demotions and requiring greater confidence that the content violates for the rest. And we’re going to tune our systems to require a much higher degree of confidence before a piece of content is taken down.”

So, essentially, Meta’s refining its automated detection systems to ensure that they don’t remove posts too hastily. And Meta says that, thus far, this has been a success, resulting in a 50% reduction in rule enforcement mistakes.

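Which is seemingly a positive, but then again, requiring higher confidence before acting also means that borderline violations are more likely to be left up. So a reduction in mistakes can also mean that more violative content is being displayed to users in its apps.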

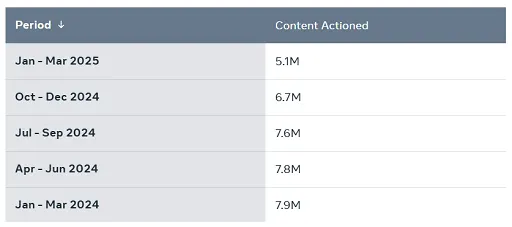

Which was also reflected in its enforcement data:

As you can see in this chart, Meta’s automated detection of bullying and harassment on Facebook declined by 12% in Q1, which means that more of that content was getting through, because of Meta’s change in approach.

Which, on a chart like this, doesn’t look like a significant impact. But in raw numbers, that’s a variance of millions of violative posts that Meta’s automated systems are no longer catching, and millions of harmful comments that are being shown to users in its apps as a result of this change.

The impact, then, could be significant, but Meta’s looking to rely even more on AI systems to understand and enforce these rules in future, in order to maximize its efforts on this front.

Will that work? Well, we don’t know as yet, and this is just one aspect of how Meta’s looking to integrate AI to assess and action its various rules and policies, to better protect its billions of users.

As noted, Zuckerberg has also flagged that “sometime in the next 12 to 18 months,” most of Meta’s evolving code base will be written by AI.

That’s a more logical application of AI processes, in that they can reproduce code by ingesting vast amounts of existing examples, then generating output based on logical matches.

But when you’re talking about rules and policies, and things that could have a big impact on how users experience each app, that seems like a riskier use of AI tools.

In response to NPR, Meta said that product risk review changes will still be overseen by humans, and that only “low-risk decisions” are being automated. But even so, it’s a window into the potential future expansion of AI, where automated systems are being relied upon more and more to dictate actual human experiences.

Is that a better way forward on these elements?

Maybe it will end up being so, but it still seems like a significant risk to take, given the huge scale of potential impacts if and when these automated systems make mistakes.