Meta says that changes to its moderation and enforcement approach, including the shift to a Community Notes model, are working, with its latest transparency reports showing a 50% reduction in enforcement mistakes in the United States.

This was one of the key aims of the switch: ensuring that more speech is allowed, and less is restricted by Meta’s rules. Community Notes can help in this regard by enabling Meta to ease back on its own enforcement, in favor of allowing the community to decide what should and should not be allowed.

And that appears to be having some effect, though the flip side of that could be that more misinformation, in particular, is being allowed to proliferate in Meta’s apps, as a reduction in mistakes could also reflect a reduction in enforcement overall.

This is one of several notes from Meta’s latest round of moderation performance data, which also includes info on content removed for rule violations, government information requests, widely viewed content, and more.

You can view all of Meta’s latest insights here, but in this post, we’ll take a look at some of the key notes.

Looking at Community Notes specifically, Meta says that it’s now added the ability to write notes on Reels, as well as on replies in Threads, while it’s also added the option to request a community note, as it continues to expand its process.

And the Community Notes approach clearly does have merit, in enabling users to have input into what’s allowed, and not, in each app. But again, it is also worth noting that any benefits of Community Notes could also be countered by a relative expansion in unreported issues that cannot be tracked.

For example, research has shown that the appearance of a Community Note on a post can significantly reduce the distribution of false claims made in that update. But that’s only relevant if a note is shown, and a key flaw in the Community Notes system on X is that 85% of all Community Notes are never displayed to X users.

The need for agreement from users of opposing political viewpoints means that the majority of notes never see the light of day, because on many of the most divisive political issues, that agreement will never be reached.

So if a note isn’t displayed, it can’t reduce a post’s reach. The same goes for complaints about mistakes: people can’t complain about a mistake if a note is never shown. Which could mean that a lot of misinformation is being allowed to proliferate, despite these seemingly positive indicators.

So, basically, I don’t know that a 50% reduction in enforcement mistakes is necessarily the positive marker that Meta is presenting it as.
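To put some rough arithmetic behind that, here’s a minimal sketch in Python. Every number in it is made up purely for illustration; none of it comes from Meta’s report, and the `mistake_stats` and `pct_change` helpers are hypothetical names. It also isn’t clear from the summary above whether Meta’s 50% figure refers to a raw count or a rate, so treat this as one possible reading: if total enforcement actions halve, the number of mistakes can halve too, even though the share of actions that are mistakes hasn’t moved at all.

```python
def mistake_stats(actions: int, mistakes: int) -> dict:
    """Return the raw mistake count and the mistake rate for one period."""
    return {"mistakes": mistakes, "rate": mistakes / actions}


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Hypothetical figures only, NOT taken from Meta's transparency reports.
before = mistake_stats(actions=1_000_000, mistakes=100_000)
after = mistake_stats(actions=500_000, mistakes=50_000)

# Change in the raw number of mistakes between the two periods.
count_change = pct_change(before["mistakes"], after["mistakes"])

# Change in the share of enforcement actions that were mistakes.
rate_change = pct_change(before["rate"], after["rate"])

print(f"Change in mistake count: {count_change:.1f}%")
print(f"Change in mistake rate:  {rate_change:.1f}%")
```

In this invented scenario, the headline mistake count falls by half while the accuracy of each individual enforcement decision stays exactly the same, which is why the overall enforcement volume matters when reading a figure like this.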

In terms of case-specific enforcement, Meta’s data shows that there’s been an increase in cases of nudity/sexual content on Facebook of late:

While content removed under its “dangerous organizations” policy has increased in IG (Meta says this was due to a bug which is being addressed):

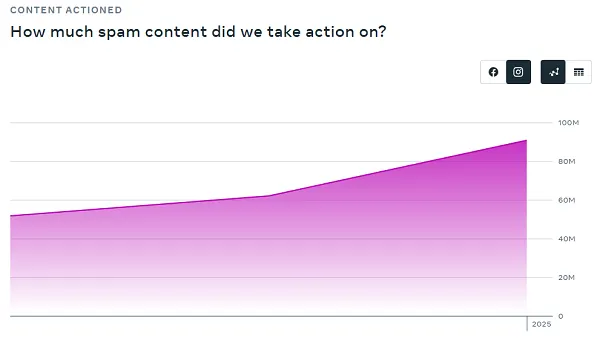

More spam is also being removed on Instagram:

And concerningly, suicide, self-injury and eating disorder content has also increased:

Some of these trends are due to improvements made to Meta’s detection technology, which is increasingly incorporating large language models to refine detection:

“Early tests suggest that LLMs can perform better than existing machine learning models, or enhance existing ones. Upon further testing, we are beginning to see LLMs operating beyond that of human performance for select policy areas.”

While others could be indicative of broader concerns in these areas.

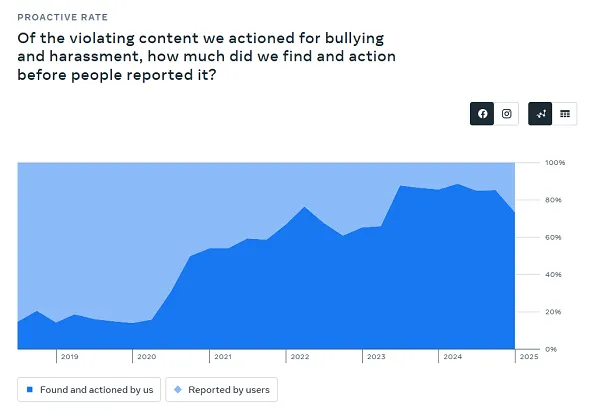

Of equal concern, however, with regard to the implementation of its new moderation approach, automated detection of bullying and harassment declined by 12%.

Could that be because Meta’s allowing more of this to happen by letting users decide what should and should not be reported?

To be fair, Meta has also noted that some of this would be attributable to it scaling back its automated detection in areas of high false positives, in order to refine and improve its models. So there could be a couple of reasons for this, but it does suggest that it could be allowing more concerning content to get through as a result.

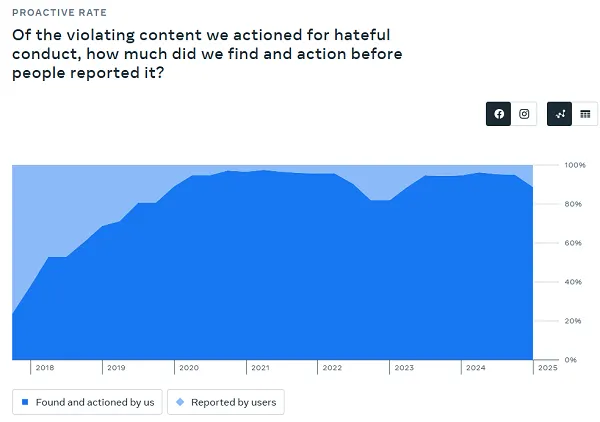

Meta’s also reported a 7% reduction in proactive detection of hateful conduct:

So these incidents are still happening; it’s just that Meta’s proactive detection now seems less sensitive to them, with Meta also opting to let Community Notes pick up the slack on such reports.

Is that an improvement? I don’t know, but it’s another marker that points to the impact of this change in approach, which could be leading to more incidents of harm in its apps. And when you also consider the massive scale of Meta’s platforms, a small percentage decline here could actually mean thousands, or even millions, more incidents.

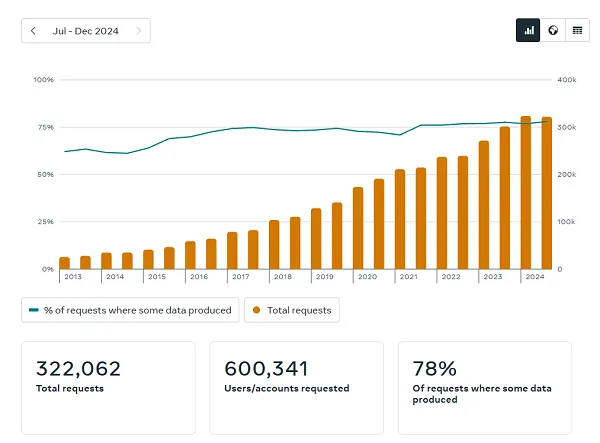

In terms of government information requests, Meta’s response numbers remained relatively steady in comparison to its last report:

Meta says that India was the top requester in this respect (a 3.8% increase in requests this quarter), followed by the United States, Brazil and Germany.

Also, Meta says that fake accounts represent approximately 3% of its worldwide monthly active users on Facebook. Meta must be more confident in its fake account detection processes, because it has generally reported the industry standard 5% on this measure. But now, it seems to believe that it’s catching out more fakes.

Individual user experiences will vary.

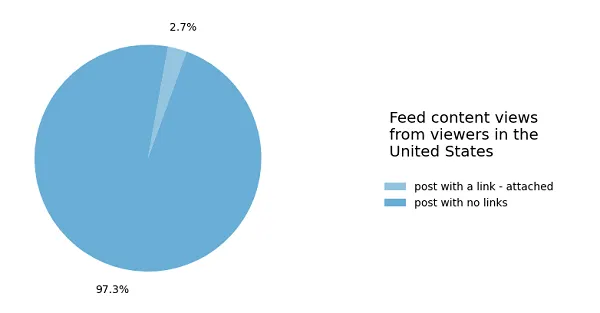

In terms of widely viewed content, this chart continues to look sad for publishers and those looking to drive traffic from Facebook.

As you can see, 97.3% of post views on Facebook in the US during Q1 2025 did not include a link to a source outside of that app.

Though that’s actually a slight improvement on Meta’s last report (+0.6% on link posts). But if you were hoping that Meta’s relaxed rules around political discussion would see publisher content gain more reach in the app once again, the numbers show that’s not the case.

Anecdotally, however, some publications have reported an increase in referral traffic from Facebook this year.

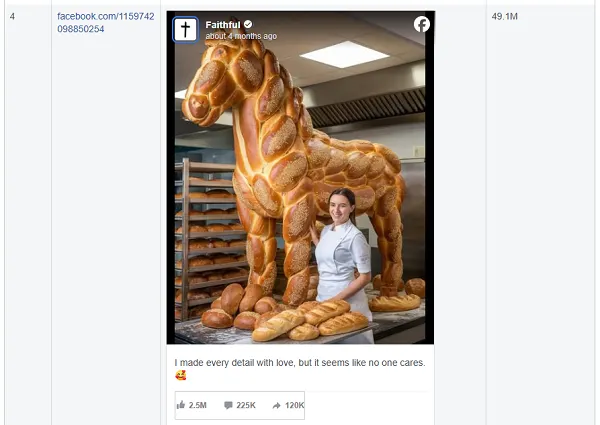

Oh, and if you were wondering what is getting traction on Facebook, AI slop is still performing ridiculously well:

That and the usual mix of supermarket gossip magazine-style celebrity updates and viral posts.

So is Facebook getting better as a result of the shift to Community Notes?

Well, it depends on the metrics you’re using. Some data here suggests that the change in moderation approach is providing benefits, but what’s omitted from those stats is likely just as relevant.

Overall, however, there have been some concerning shifts in actions taken on certain elements, which could suggest that Meta’s allowing more harmful content to spread via its apps.

We don’t know, however, because these figures only show results, not missed opportunities, and without the full context, it’s impossible to say whether its change is having an overall positive impact.

You can check out all of Meta’s latest transparency reports here.