Yeah, this seems like it’s going to be a problem in future, though maybe that’s considered the cost of progress?

Last week, Common Sense Media published a new report which found that 72% of U.S. teens have already used an AI companion, with many of them now conducting regular social interactions with their chosen virtual friends.

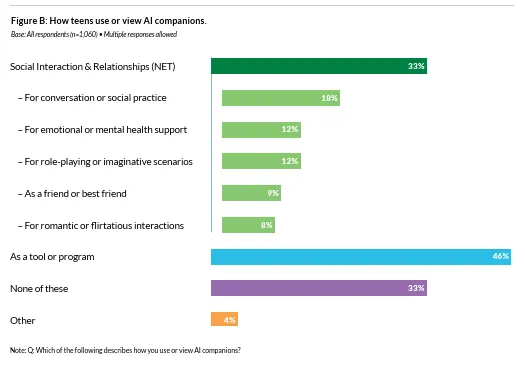

The study is based on a survey of 1,060 teens, so it’s intended as an indicative measure, not as a definitive overview of AI usage. But the trends do point to some potentially significant concerns, particularly as platforms now look to introduce AI bots that can also serve as romantic partners in some capacity.

First off, as noted, the data shows that 72% of teens have tried AI companions, and 52% have become regular users of these bots.

What’s worth noting here is that AI bots aren’t anywhere near where they’re likely to be in a few more years’ time, with the tech companies investing billions of dollars into advancing their AI bots to make them more relatable, more conversational, and better emulators of real human engagement.

But they’re not human. These are bots, which respond to conversational cues based on the context available to them and whatever weighting system each company builds into its back-end process. So they’re not an accurate simulation of actual human interaction, and they never will be, because they can’t replicate the real mental and physical connection that such interaction enables.

Yet we’re moving towards a future where this is going to become a more viable replacement for actual civic engagement. But what if a bot gets changed, infected with harmful code, hacked, shut down, etc.?

The broader mental health implications of enabling and encouraging such connections are not yet known.

But we’re moving forward anyway, with the data showing that 33% of teens already use AI companions for social interaction and relationships.

Of course, some of this may well end up being highly beneficial, in varying contexts.

For example, the ability to ask questions that you may not be comfortable asking another person could be a big help, with the survey data showing that 18% of AI companion users turn to the tools for advice.

Nonjudgmental interaction has clear benefits, while 39% of AI companion users have also transferred social skills that they’ve practiced with bots to real-life situations (notably, 45% of female users have done this, versus 34% of male users).

So there are definitely going to be benefits. But as with social media before it, the question is whether those positives will end up outweighing the potential negatives of over-reliance on non-human entities for traditionally human engagement.

31% of survey participants indicated that they find conversations with AI companions as satisfying or more satisfying than those with real-life friends, while 33% have chosen AI over humans for certain conversations.

As noted, the fact that these bots can be skewed to answer along ideological lines is a concern in this respect, as is the tendency for AI tools to “hallucinate” and make incorrect assumptions in their responses, which they then state as fact. That could lead youngsters down the wrong path and, potentially, into harm, while again, the shift to AI companions as romantic partners opens up even more questions about the future of relationships.

It seems inevitable that this is going to become a more common use for AI tools, and that our budding relationships with human simulators will lead more people to take those understanding, non-judgmental relationships to another level. Real people will never understand you the way your algorithmically aligned AI bot can, and that could actually end up exacerbating the loneliness epidemic rather than addressing it, as some have suggested it might.

And if young people are learning these new relationship behaviors in their formative years, what does that do to their future concept of human connection, if indeed they feel they need it?

And they do need it. Centuries of studies have underlined the importance of human connection and community, and the need for real relationships to help shape your understanding and perspective. AI bots may be able to simulate some of that, but actual physical connection is also important, as are human proximity, real-world participation, etc.

We’re steadily moving away from this over time, and you could argue that rising rates of severe loneliness, which the WHO has declared a “pressing global health threat,” are already having major health impacts.

Indeed, studies have shown that loneliness is associated with a 50% increased risk of developing dementia and a 30% increased risk of incident coronary artery disease or stroke.

Will AI bots help that? And if not, why are we pushing them so hard? Why is every app now trying to make you chat with these non-real entities, and share your deepest secrets with their evolving AI tools?

Is this more beneficial to society, or to the big tech platforms that are building these AI models?

If you lean towards the latter conclusion, then progress is seemingly the bigger focus, just as it was with social media before it. AI providers are already pushing for the European Union to relax its restrictions on AI development, while the looming AI development race between nations is also increasing the pressure on all governments to loosen the reins in favor of expediting innovation.

But should we feel encouraged by Meta’s quest for “superintelligence,” or concerned by the rate at which these tools are becoming common in areas of serious potential impact?

That’s not to say that AI development in itself is bad, and there are many use cases for the latest AI tools that will indeed increase efficiency, innovation, opportunity, etc.

But there do seem to be some areas in which we should probably tread more cautiously, due to the risks of over-reliance and its impacts on a broad scale.

That’s seemingly not going to happen, but in ten years’ time, we’re going to be assessing this from a whole different perspective.

You can check out Common Sense Media’s “Talk, Trust, and Trade-Offs” report here.